Artificial intelligence (AI) is rapidly transforming how we work, communicate, and make decisions, but concerns around data privacy have never been more pressing. The rise of AI assistants such as Microsoft Copilot, OpenAI’s ChatGPT, and Google Gemini has brought powerful capabilities to our fingertips, but at what cost to our personal and organisational data?

This dilemma is often referred to as the privacy paradox: we demand privacy, yet willingly share our data for convenience, personalisation, and productivity. As AI becomes more embedded in our daily workflows, understanding how these platforms handle our data is essential.

Understanding the Privacy Paradox

The term “privacy paradox” was coined by Barry Brown at HP in 2001 to describe the contradictory behaviour of users who claim to value their privacy yet continue to use online products and services that collect, and sometimes share or sell, their personal data. We do this willingly in exchange for a smoother, more convenient experience, or simply because we want something, such as a free trial or special offer, and are happy to hand over our personal details to get it.

AI intensifies this paradox. To deliver personalised responses, AI tools need context, often drawn from our emails, documents, chats, and search history. But the more context we provide, the greater the risk of data misuse, breaches, or unintended exposure.

AI’s insatiable appetite for personal data to feed its machine-learning algorithms has raised serious concerns about how that data is stored, used, and accessed. Where is this data coming from? Where is it stored? Who can access it, and under what circumstances?

AI Regulation and Legislation

Ireland and the EU are taking a strong stance on AI governance, making it crucial for businesses to choose tools that respect privacy by design.

The EU AI Act

The EU AI Act entered into force on 1 August 2024 and will be fully applicable from 2 August 2026, with some exceptions. It is the world’s first comprehensive legal framework for AI. Key points include:

- Classifies AI systems by risk levels (minimal, limited, high-risk, prohibited).

- Imposes strict obligations on high-risk systems (e.g., in HR, law enforcement, financial services).

- Requires transparency, human oversight, and data governance controls.

- Companies must prove their AI systems are compliant or risk heavy penalties (up to €35 million or 7% of global turnover).
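For the most serious breaches (prohibited AI practices), the Act caps fines at whichever of the two figures is higher: €35 million, or 7% of the company’s total worldwide annual turnover for the preceding financial year. The short Python sketch below illustrates that arithmetic. The function name is hypothetical, lower penalty tiers and the special rules for SMEs are deliberately ignored, and it is an illustration only, not legal advice.

```python
# Hypothetical helper illustrating the EU AI Act's top penalty tier
# (prohibited AI practices). Simplified sketch, not legal advice:
# lower tiers and the SME/start-up rules are deliberately ignored.

FIXED_CAP_EUR = 35_000_000   # EUR 35 million
TURNOVER_PERCENT = 7         # 7% of total worldwide annual turnover


def max_fine_eur(annual_turnover_eur: int) -> int:
    """Return the fine ceiling: the higher of the fixed cap and 7% of turnover."""
    # Integer arithmetic avoids floating-point rounding on currency amounts.
    turnover_cap = annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_cap)


if __name__ == "__main__":
    for turnover in (100_000_000, 1_000_000_000):
        print(f"Turnover EUR {turnover:,}: fine ceiling EUR {max_fine_eur(turnover):,}")
```

Up to roughly €500 million in annual turnover the fixed €35 million figure is the binding ceiling; above that, the 7% figure takes over, so a company turning over €1 billion could face a maximum fine of €70 million.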

AI Platforms in Focus

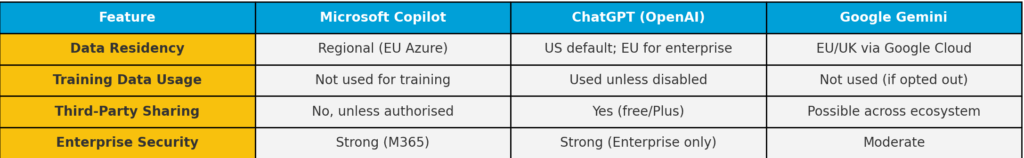

Let’s examine how three leading AI platforms (Microsoft Copilot, ChatGPT, and Gemini) approach data privacy and security.

Microsoft Copilot

- Data Residency: Copilot inherits Microsoft 365’s commitments. Data remains in your organisation’s region via Azure, supporting GDPR and other compliance frameworks.

- Security Framework: Copilot benefits from Microsoft’s enterprise-grade security, including encryption, access controls, and compliance certifications.

- Training Data: Microsoft explicitly states that customer data is not used to train foundational models.

- Third-Party Sharing: Data is not shared with external parties without explicit consent.

Verdict: Strong privacy posture for EU businesses, especially those using Microsoft 365 Business Premium.

OpenAI ChatGPT

- Data Usage: By default, data from free-tier and ChatGPT Plus users may be used to train OpenAI’s models. This can be disabled in settings.

- Memory Feature: “Chat history” can be turned off, but memory (where the model remembers facts between chats) may still raise concerns around data retention.

- Data Residency: OpenAI offers EU-based storage via Microsoft Azure, but this is primarily available to enterprise customers and is not guaranteed for all users.

- Enterprise Controls: With ChatGPT Enterprise or Team, customer prompts and data are not used to train models, and data is isolated with encryption and audit controls.

Verdict: Suitable for general use, but free/Plus versions are risky for confidential or regulated environments.

Google Gemini

- Retention Controls: Users can choose how long their activity is stored (from 3 to 36 months) and can also delete it manually.

- Training Transparency: Google states Gemini “does not use your prompts to train models” unless you’ve opted in via Google Workspace Labs, though details remain vague.

- Data Residency: EU/UK support is available for Workspace customers via Google Cloud, with options for data sovereignty.

- Third-Party Sharing: Some data is processed across Google’s ecosystem, raising questions around cross-service access.

Verdict: Improving, but lacks the transparency of Microsoft’s enterprise model.

Comparative Analysis

Implications for Users and Businesses

Data privacy is always a hot topic, but while users voice concern, their behaviour tells a very different story. Most will continue to accept cookies without a second thought, fill in forms for freebies (nothing is ever really free) and tick ‘agree’ boxes without reading the fine print, because convenience wins.

For individual users, the privacy paradox is a personal trade-off. Do you want smarter suggestions at the cost of sharing your data? For businesses, especially those in regulated sectors like finance, healthcare, or government, the stakes are higher. Data breaches, non-compliance, and reputational damage are real risks.

Choosing an AI platform should go beyond features and performance. It must align with your organisation’s privacy values, legal obligations, and risk appetite.

Conclusion

As AI becomes more capable, the tension between convenience and control will only grow. Among the leading platforms, Microsoft Copilot currently offers the most robust and transparent privacy framework, particularly for enterprise users and those operating under GDPR.

Ultimately, the question isn’t just “What can AI do for you?” but “What are you willing to give up in return?”

Our consultants can help you implement Microsoft Copilot securely and compliantly – tailored to your unique environment.

Book a free consultation and take the first step toward privacy-conscious productivity.

Get in Touch: hello@it.ie