In an era marked by rapid advancements in AI technology, innovation is a double-edged sword. Picture, if you will, an angel and a devil perched upon your shoulders. The angel whispers of the boundless potential and ethical uses of Generative AI, heralding a future of convenience and progress. The devil, by contrast, murmurs temptations, exploiting these same technologies for deceit and fraud. This metaphor captures the duality of digital advancement: the line between beneficial and harmful uses of AI is increasingly blurred. This article ventures into the shadowy realm of AI-driven voice cloning scams, offering insights into safeguarding yourself and your business against these criminal exploits.

Understanding the Threat

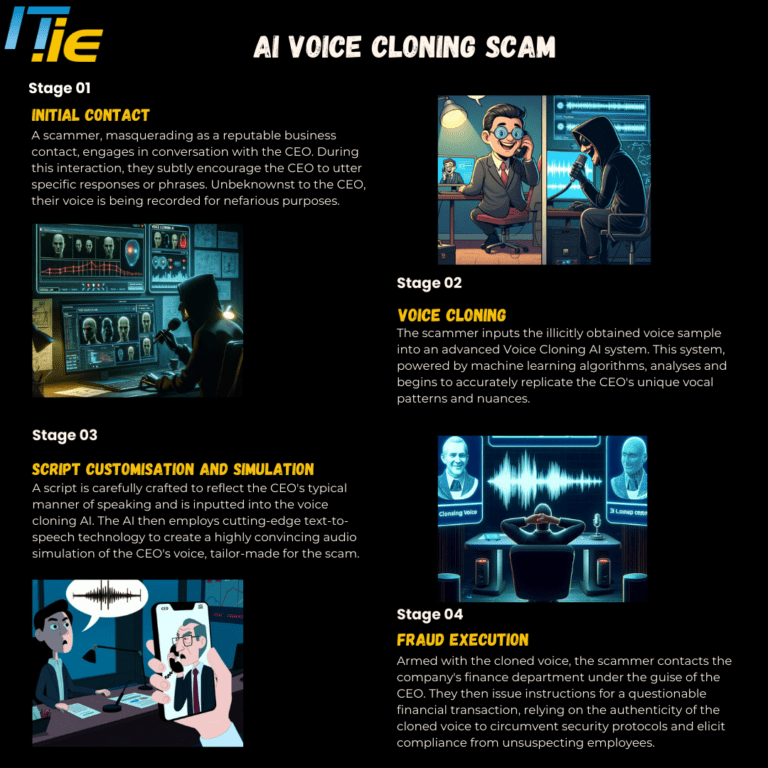

AI voice fraud, a sophisticated form of social engineering, manipulates trust by mimicking the voices of those you know, such as a family member, a public figure, or a work colleague. Unlike traditional scams, which cast a wide net hoping to catch the unaware, voice cloning scams target with precision, using familiar voices to bypass your defences.

Imagine receiving a call that chills your blood: a voice, indistinguishable from that of a loved one, caught up in an emergency and demanding immediate financial aid. It could be a grandchild in hospital who needs to pay a medical bill, or a child screaming that they’ve been kidnapped, with the kidnappers demanding immediate ransom payment. These aren’t hypothetical scenarios but actual reported examples of cloned voice scams from across the globe.

Now let’s take the familiar CEO scam, where a junior employee, often someone in the accounts department, receives an urgent email from the boss demanding that a payment be made immediately to an account or that sensitive information be sent to a given email address. This type of scam remains effective because it plays on the junior employee’s fear of questioning the boss. There is, however, an improved general awareness of this type of scam, particularly in organisations that have implemented awareness training, with employees now encouraged to question unusual demands that purport to come from someone in authority. But what happens if, instead of an email, the boss calls you and makes the same request or demand? It’s his or her voice and you don’t see any reason to question it. Would you now carry out the demand?

Harvesting Voices: The Scammers' Methodology

Scammers procure voice samples for cloning through a variety of cunning methods, each designed to exploit the ubiquity of digital communication. One common approach involves extracting audio from publicly available sources, such as videos, podcasts, or social media posts where the target has spoken. Another, more direct tactic involves the scammer phoning a senior figure at an organisation under the guise of a legitimate business partner and attempting to prompt the target into speaking certain phrases. This gives them better material with which to train the AI and create a more realistic clone of the target’s voice.

The advancement and widespread availability of AI voice cloning technology exacerbate these risks. OpenAI’s Voice Engine, capable of creating a synthetic voice from just a 15-second sample, epitomises the strides made in this field. Despite OpenAI’s cautious approach to release, numerous other platforms offer voice cloning capabilities with varying degrees of realism, many of which are accessible as paid or open-source solutions. These advancements have transformed voice cloning from a novelty into a tool readily exploited by nefarious actors, compelling us to scrutinise every interaction that isn’t face to face with renewed scepticism.

A Close Call: Eamon Gallagher's Brush with AI Voice Cloning

The following section features a real-world example provided by our founder and Managing Director, Eamon Gallagher, drawing on a recent firsthand experience of this threat.

“It was around midday on Friday 12th April. It had been a long week and, because I had spent the two previous days meeting clients, I was working hard to win back control over the inbox. My phone rang and it was from an unknown Irish landline number. The caller explained that they were looking to speak with the CEO and their demeanour, although reasonably professional, seemed somewhat rushed and aggressive. The caller asked me to confirm my full name and the company I worked with. I didn’t. I asked him to confirm who he was, and he said that he was calling on behalf of an international Business Awards and that IT.ie had been nominated in three categories. If it hadn’t been for his previous tone, I think I may have believed him, despite not knowing if there was such an event.

He then went on to read out a statement of what we do, what type of business we are and the services we provide. Next, he asked that I read back what he said word for word. It was at this point that some sort of subconscious instinct kicked in and I responded with an almost incoherent hushed voice. The next few exchanges, comical as they seem now, were a back and forth of the caller getting more and more frustrated as he dealt with someone who had somehow lost the ability to string two words together mid-conversation.

Without any doubt, this was an attempt to capture my voice for a potential voice-cloning scam. This was my first direct encounter with this new type of AI-driven scam, and while I hope it will fade into obscurity, the reality is that it will persist and evolve. Given the rapid advancements in AI technology, these scams are expected to become even more sophisticated and convincing. In the future, we might find ourselves questioning the authenticity of every interaction that doesn’t occur face-to-face.”

Recognising the Red Flags

Be wary of unsolicited calls that push you towards articulating certain responses or revealing sensitive information. A red flag should go up when the caller, under the guise of verification or urgency, insists on hearing specific phrases or sentences. Such interactions often feel jarring, as if the context doesn’t quite align with the nature of your relationship with the supposed caller or the norms of professional communication.

The infographic below looks at the various stages of an AI Voice Cloning Scam.

Proactive Measures to Protect Yourself and Your Business

- Educate Yourself and Your Team: Awareness is the cornerstone of defence. Regular training sessions can keep you and your staff informed about the latest scamming techniques and how to recognise them.

- Implement Verification Procedures: Adopt multi-step verification for sensitive requests. For example, use a daily keyword or security questions that a scammer couldn’t possibly know.

- Limit Information Online: Scammers often gather personal information from social media or company websites to make their impersonations more convincing. Limit the amount of personal and sensitive business information you share online to reduce this risk.

- Establish a Clear Protocol for Financial Transactions: Create and enforce strict protocols for financial transactions, including who is authorised to request and approve them. Ensure that these protocols include verification steps that cannot easily be replicated by AI.

- Be Sceptical of Caller ID: Teach your team that caller ID can be spoofed and to verify suspicious calls through a separate, secure channel.

- End the Call: This might go without saying, but if you suspect that a caller is trying to harvest your voice, don’t engage. End the call immediately.
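
For teams that want to automate the "daily keyword" idea from the list above, the following is a minimal sketch in Python rather than a recommended product. It assumes that the people involved already share a secret that was exchanged in person or over a trusted channel, and it derives a fresh keyword for each date from that secret, so the keyword never needs to be sent by email or phone. The word list, the function names (`daily_keyword`, `keyword_matches`, `may_release_payment`) and the two-approver rule are all hypothetical choices made for illustration; a real deployment would need a much longer word list and a payment policy agreed with your finance team.

```python
import hashlib
import hmac
from datetime import date

# Hypothetical word list for illustration only; a real one should be far longer.
WORDS = ["amber", "birch", "cobalt", "delta", "ember", "fjord", "garnet", "harbour"]


def daily_keyword(secret: bytes, day: date, n_words: int = 2) -> str:
    """Derive the keyword for `day` from a shared secret using HMAC-SHA256."""
    message = day.isoformat().encode("utf-8")
    digest = hmac.new(secret, message, hashlib.sha256).digest()
    # Each of the first n_words digest bytes selects one word from the list.
    return "-".join(WORDS[b % len(WORDS)] for b in digest[:n_words])


def keyword_matches(secret: bytes, day: date, spoken: str) -> bool:
    """Check what the caller said against the keyword expected for `day`."""
    expected = daily_keyword(secret, day).encode("utf-8")
    supplied = spoken.strip().lower().encode("utf-8")
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, supplied)


def may_release_payment(keyword_ok: bool, callback_verified: bool,
                        approvers: set[str]) -> bool:
    """Hypothetical policy: keyword, call-back on a known number, two approvers."""
    return keyword_ok and callback_verified and len(approvers) >= 2
```

In this sketch, a familiar voice alone is never enough to release a payment: the caller must supply the keyword, someone must call back on a number already on file, and two different authorised people must approve.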

Conclusion

Voice cloning scams represent an emerging and rapidly advancing threat. While malevolent actors might exploit AI technology for illicit purposes, defenders are also leveraging AI to combat these risks. In the United States, the Federal Trade Commission (FTC) recently announced the winners of the “Voice Cloning Challenge,” an initiative aimed at fostering innovative solutions to shield consumers from the misuse of AI-enabled voice cloning technologies. As threats continue to evolve, protective measures are expected to advance too, albeit with some delay. However, it’s crucial to acknowledge that no solution offers absolute protection. The most effective defence combines a high level of vigilance, a robust arsenal of cybersecurity tools, and a healthy dose of scepticism.